Pricing Intelligence vs Web Scraping Tools is one of the most misunderstood comparisons in modern data-driven pricing. Both involve collecting competitive pricing data, but they serve very different purposes once pricing decisions begin to impact revenue, margin, and automation.

Many teams start with scraping because it feels flexible and inexpensive. Over time, data gaps, product mismatches, and operational strain surface. This article explains the real differences, when each approach works, and how to choose the right model without costly rework.

Pricing intelligence is a system that turns market prices into trusted, structured, and actionable insights. It includes data collection, product matching, validation, governance, and delivery into pricing workflows. Unlike scraping, it is designed to support repeatable decisions at scale.

Pricing intelligence is often mistaken for data scraping with a dashboard. In reality, it is an operational layer that ensures pricing data is accurate, comparable, and usable across teams.

The reality gap appears when organizations expect raw scraped prices to behave like intelligence. Without normalization, error handling, and accountability, pricing teams spend more time fixing data than acting on it.

A pricing intelligence system typically includes:

- Automated competitor monitoring
- SKU and variant matching
- Outlier detection and validation rules
- Change tracking and historical context
- Integration into pricing or analytics tools
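To make "outlier detection and validation rules" concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not how any particular platform works: the function name `validate_price`, the issue labels, and the 50% deviation threshold are all hypothetical.

```python
from statistics import median


def validate_price(price: float, history: list[float], max_deviation: float = 0.5) -> list[str]:
    """Return a list of issue labels for a newly observed price.

    Assumed rules (illustrative only):
    - a price must be positive
    - a price should not deviate from the historical median
      by more than `max_deviation` (as a fraction of the median)
    """
    issues = []
    if price <= 0:
        issues.append("non_positive_price")
    if history:
        baseline = median(history)
        if baseline > 0 and abs(price - baseline) / baseline > max_deviation:
            issues.append("deviates_from_history")
    return issues


# Hypothetical price history for one SKU at one competitor.
history = [19.99, 20.49, 19.79, 20.99]

print(validate_price(20.10, history))  # passes both rules
print(validate_price(2.05, history))   # likely a misplaced decimal point
```

A flagged price would typically be held for review rather than passed into pricing logic; the right threshold depends on how volatile the category is.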

tgndata operates as a validation and monitoring backbone in this layer, ensuring pricing signals remain decision-ready rather than raw.

Web scraping tools extract raw data from websites using scripts or bots. They collect prices, availability, or metadata but do not validate accuracy, match products, or manage changes. Scraping outputs require significant downstream processing to become usable.

Web scraping tools are designed for flexibility and speed. They are excellent at answering questions like "What price is shown on this page right now?"

The limitation emerges when scale and consistency matter. Websites change layouts. Products have variants. Prices differ by region, device, or logged-in state.

Scraping tools usually require:

- Constant maintenance
- Custom parsing logic per site
- Manual product mapping
- External validation processes
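As a small illustration of why parsing logic ends up being written per site, the sketch below turns raw price strings into numbers. It is a simplified example, not a complete parser: the function name `parse_price` is hypothetical, and the rule "the last separator is the decimal mark only when one or two digits follow it" is an assumption that will misread some real-world formats.

```python
import re
from decimal import Decimal
from typing import Optional


def parse_price(text: str) -> Optional[Decimal]:
    """Extract the first price-like number from raw page text.

    Assumption: the last '.' or ',' is a decimal mark only if it is
    followed by one or two digits; otherwise all separators are
    treated as thousands separators.
    """
    match = re.search(r"\d[\d.,]*", text)
    if match is None:
        return None  # e.g. "Out of stock"
    raw = match.group().rstrip(".,")  # drop trailing sentence punctuation
    last_sep = max(raw.rfind("."), raw.rfind(","))
    if last_sep != -1 and len(raw) - last_sep - 1 in (1, 2):
        integer_part = re.sub(r"[.,]", "", raw[:last_sep])
        return Decimal(f"{integer_part}.{raw[last_sep + 1:]}")
    return Decimal(re.sub(r"[.,]", "", raw))


for sample in ["$1,299.00", "1.299,00 €", "EUR 1,299", "Out of stock"]:
    print(sample, "->", parse_price(sample))
```

Even this small function encodes assumptions about locale and layout, which is the maintenance burden the list above describes.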

Use case example:

Situation. A small team monitors five competitors manually.

What breaks. As SKUs grow, scraped prices mismatch variants.

What changes. Errors increase, decisions slow.

Strategic takeaway. Scraping works for exploration, not operations.

Accuracy is the core differentiator between pricing intelligence and scraping. Intelligence platforms validate prices, detect anomalies, and enforce consistency. Scraping tools deliver raw outputs that must be checked manually or with custom logic.

Pricing errors do not fail loudly. They silently erode margin and credibility.

In scraping setups, accuracy depends on how well engineers anticipate edge cases. Missed selectors, wrong variants, or cached prices can flow straight into decisions.

Pricing intelligence platforms introduce:

- Confidence scoring
- Cross-source verification
- Historical comparison
- Alerting on anomalies
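One simple way to think about cross-source verification and confidence scoring is sketched below. This is an assumed, simplified scheme rather than any vendor's actual method: the function name `cross_source_confidence`, the source labels, and the 2% tolerance are hypothetical.

```python
from statistics import median
from typing import Optional


def cross_source_confidence(
    observations: dict[str, float], tolerance: float = 0.02
) -> tuple[Optional[float], float]:
    """Return (consensus_price, confidence) for one product.

    The consensus is the median across sources. Confidence is the share
    of sources whose price lies within `tolerance` (a fraction of the
    consensus) of that median.
    """
    prices = list(observations.values())
    if not prices:
        return None, 0.0
    consensus = median(prices)
    agreeing = [p for p in prices if abs(p - consensus) <= tolerance * consensus]
    return consensus, len(agreeing) / len(prices)


# Hypothetical observations of the same SKU from three collection paths.
observations = {"site_html": 49.99, "product_feed": 49.99, "mobile_app": 39.99}
consensus, confidence = cross_source_confidence(observations)
print(consensus, round(confidence, 2))
```

A low confidence value could trigger an alert or a re-check instead of an automated price change.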

tgndata adds a monitoring layer that flags discrepancies before they affect pricing logic.

Use case example:

Situation. A retailer updates prices dynamically.

What breaks. Scraped prices include promotions not applicable to all users.

What changes. Validated intelligence filters misleading signals.

Strategic takeaway. Trust enables automation.

Product matching is the hardest part of pricing intelligence. Intelligence platforms normalize SKUs, variants, and bundles across retailers. Scraping tools typically leave matching as a manual or custom-coded task.

Two products with the same name are rarely the same offer.

Differences include:

- Pack size
- Regional SKUs
- Private labels
- Bundled accessories

Scraping tools treat each page as truth. Pricing intelligence treats each product as an entity.

Without normalization:

- Comparisons are flawed
- Price indexes mislead
- Strategy decisions drift

Use case example:

Situation. Brand compares competitor pricing weekly.

What breaks. Bundles and multipacks inflate perceived discounts.

What changes. Normalized intelligence reveals true gaps.

Strategic takeaway. Matching accuracy defines insight quality.
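The multipack problem can be shown with a few lines of arithmetic. The sketch below normalizes offers to a per-unit price before comparing them; the `Offer` type, the retailer names, and the prices are hypothetical, and real matching also has to account for variants, regions, and bundled accessories.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Offer:
    retailer: str
    price: float    # listed price for the whole pack
    pack_size: int  # number of units in the pack


def unit_price(offer: Offer) -> float:
    """Price per single unit, so packs of different sizes are comparable."""
    if offer.pack_size <= 0:
        raise ValueError("pack_size must be positive")
    return offer.price / offer.pack_size


def price_gap(ours: Offer, theirs: Offer) -> float:
    """Relative gap of the competitor's unit price versus ours.

    Negative means the competitor is cheaper per unit.
    """
    return unit_price(theirs) / unit_price(ours) - 1


ours = Offer("our_store", price=30.00, pack_size=6)    # 5.00 per unit
theirs = Offer("competitor", price=6.00, pack_size=1)  # 6.00 per unit

naive_gap = theirs.price / ours.price - 1
print(f"naive gap:      {naive_gap:+.0%}")
print(f"normalized gap: {price_gap(ours, theirs):+.0%}")
```

Comparing listed prices alone makes the competitor look far cheaper, while the per-unit comparison shows the competitor is actually more expensive.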

Pricing intelligence platforms are designed to feed decisions, not just dashboards. They integrate into pricing engines, analytics tools, and workflows. Scraping tools stop at extraction.

Scraped data often lives in spreadsheets or ad hoc databases. Decision context is lost.

Pricing intelligence supports:

- Rule-based pricing
- MAP compliance monitoring
- Margin guardrails
- Historical trend analysis
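A rule-based price with a margin guardrail can be sketched in a few lines. The rule below (undercut the competitor slightly, but never drop below a minimum margin) is an assumed example; the function name `reprice` and the default 15% margin and 1% undercut are hypothetical values, not recommendations.

```python
def reprice(
    competitor_price: float,
    unit_cost: float,
    min_margin: float = 0.15,
    undercut: float = 0.01,
) -> float:
    """Apply one pricing rule with a margin guardrail.

    Rule: price slightly below the competitor.
    Guardrail: never go below the price at which margin, expressed as a
    fraction of the selling price, equals `min_margin`.
    """
    target = competitor_price * (1 - undercut)
    floor = unit_cost / (1 - min_margin)
    return round(max(target, floor), 2)


print(reprice(competitor_price=100.00, unit_cost=60.00))  # rule applies
print(reprice(competitor_price=65.00, unit_cost=60.00))   # guardrail applies
```

The guardrail only protects margin if the competitor price feeding the rule is trustworthy, which is why validation sits upstream of rules like this.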

Feature → Benefit → Outcome table:

| Feature or Capability | Business Benefit | KPI Impact | Role Owner |
|---|---|---|---|
| Automated validation | Reduced pricing errors | Margin protection | Pricing manager |
| SKU normalization | Comparable insights | Price index accuracy | eCom analyst |
| Change tracking | Faster reaction | Time to repricing | SEO lead |
| Alerts and workflows | Operational efficiency | Conversion lift | Brand strategist |

The most common mistake is underestimating operational cost. Scraping seems cheap until accuracy, maintenance, and validation consume teams. Pricing intelligence reduces hidden costs.

Common pitfalls include:

- Ignoring data drift
- Overlooking compliance risks
- Assuming scraping scales linearly
- Treating pricing as a one-time analysis

Use case example:

Situation. Marketplace expands to new regions.

What breaks. Scrapers fail on localization.

What changes. Intelligence handles regional logic.

Strategic takeaway. Scale exposes hidden fragility.

Scraping tools make sense for research, experimentation, and low-risk monitoring. Pricing intelligence is essential when pricing decisions are automated, revenue-critical, or compliance-sensitive.

Choose scraping if:

- SKUs are limited
- Decisions are manual
- Errors are low impact

Choose pricing intelligence if:

- Pricing updates are frequent
- Margin matters
- Teams rely on shared truth

Use case example:

Situation. Enterprise retailer automates repricing.

What breaks. Raw scraped data causes oscillations.

What changes. Intelligence stabilizes pricing logic.

Strategic takeaway. Intelligence enables confidence.
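One common way to damp this kind of oscillation is a deadband: ignore proposed changes smaller than a threshold. The article does not say which stabilization technique a given platform uses, so the sketch below is an assumed illustration; the function name `next_price` and the 2% deadband are hypothetical.

```python
def next_price(current_price: float, proposed_price: float, deadband: float = 0.02) -> float:
    """Accept a proposed price only if it moves more than `deadband`
    (as a fraction of the current price); otherwise keep the current price.
    """
    if current_price <= 0:
        return proposed_price
    change = abs(proposed_price - current_price) / current_price
    return proposed_price if change > deadband else current_price


# Hypothetical proposals derived from noisy competitor observations.
proposals = [20.00, 20.10, 19.95, 20.15, 18.90]

price = 20.00
applied = []
for proposed in proposals:
    price = next_price(price, proposed)
    applied.append(price)

print(applied)
```

Small fluctuations are absorbed, while the final, larger move is applied. A deadband reduces noise-driven changes but does not fix wrong inputs, so it complements validation rather than replacing it.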

Pricing intelligence is used to monitor competitor pricing, validate market signals, and support pricing decisions at scale. It transforms raw price data into structured insights that teams can trust for automation, compliance, and revenue optimization.

Web scraping legality depends on site terms and jurisdiction. Even when legal, scraped data may be incomplete or misleading. Pricing intelligence platforms often mitigate risk by applying governance and validation layers.

Web scraping alone cannot replace pricing intelligence for operational pricing. Scraping lacks validation, normalization, and governance, which are critical for accurate and automated pricing decisions.

Without accurate product matching, price comparisons become misleading. Differences in variants, bundles, or SKUs can distort insights and lead to poor pricing decisions.

Update frequency depends on market volatility. Many teams monitor daily or intraday. Pricing intelligence platforms support continuous monitoring without manual overhead.

Pricing managers, ecommerce analysts, SEO leads, and brand strategists benefit most when pricing accuracy directly impacts margin, conversion, or compliance.

Pricing Intelligence vs Web Scraping Tools is not a tooling debate; it is a maturity decision. Scraping collects signals. Pricing intelligence transforms them into decisions that scale with confidence.

As pricing becomes faster, automated, and more visible, accuracy and governance matter more than flexibility. Platforms like tgndata serve as the operational backbone that ensures pricing data remains trustworthy as complexity grows.

We use cookies to provide you with an optimal experience, for marketing and statistical purposes only with your consent, which you may revoke at any time. Please refer to our Privacy Policy for more information.

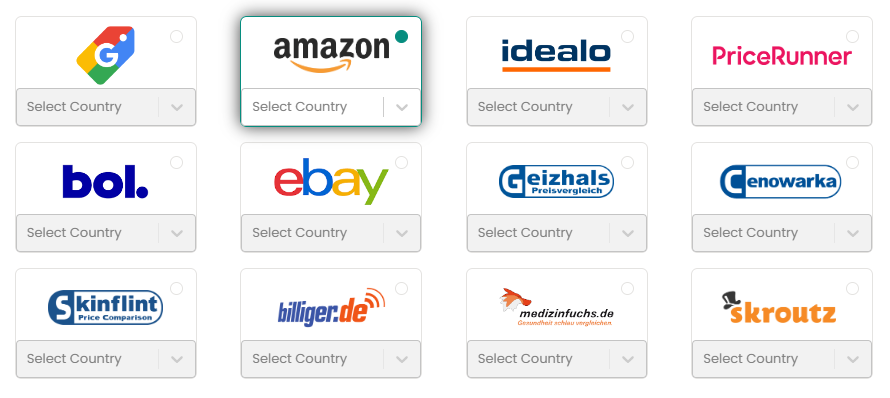

Missing an important marketplace?

Send us your request to add it!